Near-real-time mapping of water bodies from satellite imagery plays a critical role in water management. Continuous monitoring of environmental change, such as estimating water availability or predicting floods and droughts, is essential to human activities such as agriculture, hydrology and water management, and Copernicus Sentinel-2 data are lending a hand.

Researchers from the SpaceTimeLab of University College London (UCL) and the Joint Research Centre (JRC) of the European Commission recently carried out a study on convolutional neural networks (CNNs) for water segmentation, using Copernicus Sentinel-2 red, green, blue (RGB) composites as well as spectral indices derived from the near-infrared (NIR) and short-wave infrared (SWIR) bands, i.e. indices computed either from all spectral bands or from the visible and infrared bands.

Their study aimed to determine whether a single CNN based on RGB image classification can effectively segment water on a global scale and outperform traditional spectral methods. Additionally, the study evaluated the extent to which smaller datasets covering very complex patterns (e.g. harbour megacities) can be used to improve globally applicable CNNs within a specific region.

Data from the Sentinel-2 satellites of the European Union’s Copernicus Programme were used. The mission comprises a constellation of two polar-orbiting satellites placed in the same sun-synchronous orbit, phased at 180° to each other. It aims at monitoring variability in land surface conditions, and its wide swath width (290 km) and short revisit time (10 days at the equator with one satellite, and 5 days with two satellites under cloud-free conditions, which results in 2-3 days at mid-latitudes) support monitoring of Earth's surface changes.

Both satellites carry the MultiSpectral Instrument (MSI), a filter-based push-broom imager. The bands at 10 m resolution are the blue (458 to 523 nm), green (543 to 578 nm), red (650 to 680 nm) and near-infrared (NIR) (785 to 900 nm).

The Sentinel Hub application programming interface (API) was used to source all data used within this study. Sites were identified with the aid of the Google Earth imagery platform. Heavily built-up areas with complex sea-to-land interfaces, or locations with densely packed, diverse inland water bodies, were selected.

In recent years, the rapid growth in the availability and capabilities of graphics processing units (GPUs) has driven the development of sophisticated deep learning (DL) architectures, and more specifically, convolutional neural networks (CNNs). Innovations in CNN architecture have enabled multiscale contextual detection of features within a scene (Chen et al. 2018).

This has led to a surge of interest in state-of-the-art CNN applications to classify land with semantic segmentation (Hoeser and Kuenzer 2020; Tsagkatakis et al. 2019). CNNs have been hugely successful when used on very high-resolution imagery (<1 m per pixel), with reported overall accuracy scores that exceed 99% (Talal et al. 2018; Chen et al. 2018). CNNs have been less successful on medium resolution imagery, achieving segmentation results ranging from 84% to 97% overall accuracy (Isikdogan, Bovik, and Passalacqua 2017; Wang et al. 2020; Wieland and Martinis 2020). Medium resolution imagery underpins the majority of land mapping activities, due to its typically wider spatial coverage and higher temporal resolution, highlighting a need for further development within this field (Belward and Skoien 2015).
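Overall accuracy, the metric quoted above, is the share of pixels whose predicted class matches the reference label. A minimal sketch in plain Python follows; the function name `overall_accuracy` and the toy 3 × 4 masks are illustrative assumptions, not data from the study.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # 1 = water, 0 = land


def overall_accuracy(predicted: Mask, reference: Mask) -> float:
    """Fraction of pixels where the predicted class equals the reference class."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of rows")
    correct = 0
    total = 0
    for pred_row, ref_row in zip(predicted, reference):
        if len(pred_row) != len(ref_row):
            raise ValueError("mask rows must have the same length")
        for p, r in zip(pred_row, ref_row):
            correct += int(p == r)
            total += 1
    if total == 0:
        raise ValueError("masks must not be empty")
    return correct / total


# Toy example (hypothetical values): 12 pixels, one of them misclassified.
reference_mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
]
predicted_mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],  # second pixel wrongly predicted as water
    [0, 0, 0, 1],
]
accuracy = overall_accuracy(predicted_mask, reference_mask)
print(f"overall accuracy: {accuracy:.3f}")
```

Because water often covers only a small share of a scene, overall accuracy can look high even when small water bodies are missed, so it is best read alongside the predicted mask itself.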

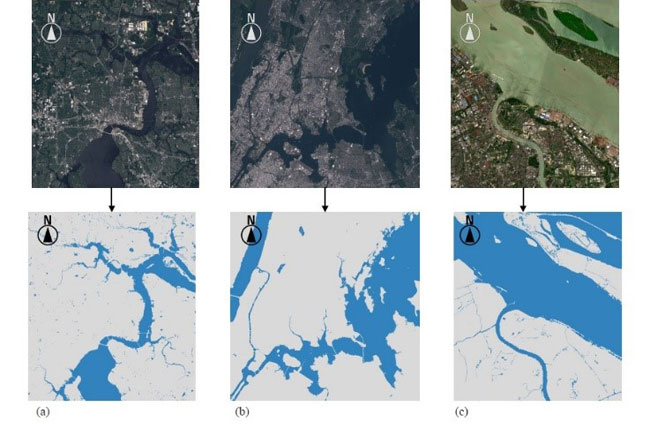

Three areas were designated and reserved specifically as a benchmark for evaluating water mask predictions. They were chosen because they represent a globally diverse range of water body typologies, and they were not exposed to the CNN at any stage of the training process.

The first evaluation area was a 21.96 km × 19.52 km region covering Jacksonville, Florida. The area was chosen due to the extraordinary density of small lakes within the land, and the complex meandering inland river network. The second area was a 19.52 km × 19.52 km region covering New York. It was chosen primarily due to the densely packed tall buildings with extensive shadowed regions. The third area was a 21.96 km × 19.52 km region of the northern section of Shanghai City. This area enabled the model to be evaluated on a transient intertidal zone with high levels of suspended sediment. Additionally, the area has a very high density of both large and small boats.
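To give a sense of scale, the evaluation areas can be converted to pixel dimensions. The sketch below assumes the 10 m Sentinel-2 bands, so each pixel covers 10 m × 10 m on the ground; the helper name `extent_to_pixels` is hypothetical.

```python
from typing import Dict, Tuple


def extent_to_pixels(width_km: float, height_km: float,
                     resolution_m: float = 10.0) -> Tuple[int, int]:
    """Convert a ground extent in kilometres to (columns, rows) at a given pixel size."""
    cols = round(width_km * 1000 / resolution_m)
    rows = round(height_km * 1000 / resolution_m)
    return cols, rows


# Extents of the three evaluation areas as stated in the text (width, height in km).
areas_km: Dict[str, Tuple[float, float]] = {
    "Jacksonville": (21.96, 19.52),
    "New York": (19.52, 19.52),
    "Shanghai": (21.96, 19.52),
}

pixel_sizes = {name: extent_to_pixels(w, h) for name, (w, h) in areas_km.items()}
for name, (cols, rows) in pixel_sizes.items():
    print(f"{name}: {cols} x {rows} pixels ({cols * rows / 1e6:.2f} million)")
```

Under that assumption, the larger areas are 2196 × 1952 pixels (about 4.3 million pixels each) and the New York area is 1952 × 1952 pixels.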

Transfer learning performance

During the initial training stages, the DeepLabV3_Global model was shown a globally diverse range of water body typologies. The characteristics of these water bodies are heavily influenced by interdependent variables, such as local geomorphology, weather patterns and human activities.

These variables are often homogeneous within a region. As an example, Florida is characterised by a porous plateau of karst limestone, which allows water to move freely, forming large wetlands and an extraordinary number of small lakes (Beck 1986).

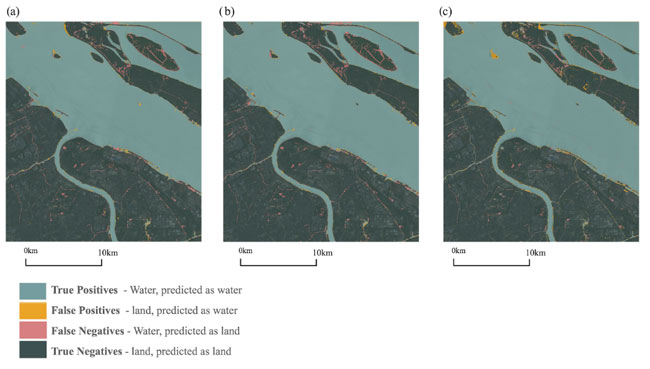

The DeepLabV3_Global model learned the features needed to predict water bodies at all three sites, based upon the generic characteristics of water bodies. Re-training the network with a small number of local samples reinforced the correct predictions made by the DeepLabV3_Global model, while extracting characteristics specific to the local region and transferring that knowledge into the new network.

This was particularly successful when applied to the New York test site: large areas of forest were predicted as water by the DeepLabV3_Global model, but this error was resolved in the DeepLabV3_New_York model prediction. It could be speculated that the DeepLabV3_Global model had fitted to the green-texture-rich water bodies in the Florida dataset and that, when knowledge was extracted and transferred to the DeepLabV3_New_York network, the error was eliminated.

In all test cases, the retraining of the networks resulted in some new errors that did not occur in the DeepLabV3_Global predictions. The most notable, unexpected error was the patch of water identified as land in the Florida test case.

Deep learning models are known to be robust to label noise that is evenly distributed across a large dataset, yet highly sensitive to label noise that is concentrated within the dataset (Karimi et al. 2020). Errors most likely arose from concentrated label noise within the smaller subsets of data. This noise would also be amplified in the augmentation process and transferred to the retrained network.

Conclusions

Better results could be achieved through a redesign of the CNN architecture, to better suit EO imagery. This could involve adjusting the dilation rates of the atrous convolution kernels to better suit the clustered nature of the water bodies.
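As a rough illustration of what the dilation rate controls, the sketch below implements a one-dimensional atrous (dilated) convolution in plain Python. It is not the DeepLabV3 code; the function names and the toy signal are assumptions made for illustration. With dilation rate r, a kernel of k taps samples inputs r positions apart, so it spans k + (k − 1)(r − 1) inputs without adding any weights.

```python
from typing import List, Sequence


def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Number of input positions spanned by a dilated kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def atrous_conv1d(signal: Sequence[float], kernel: Sequence[float],
                  dilation: int = 1) -> List[float]:
    """'Valid' 1-D atrous convolution (cross-correlation, as in CNN layers)."""
    if dilation < 1:
        raise ValueError("dilation must be >= 1")
    span = effective_kernel_size(len(kernel), dilation)
    output = []
    for start in range(len(signal) - span + 1):
        # Kernel taps are applied to inputs spaced `dilation` positions apart.
        output.append(sum(w * signal[start + i * dilation]
                          for i, w in enumerate(kernel)))
    return output


signal = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]  # toy ramp, hypothetical values
kernel = [1, 0, -1]                      # simple difference filter

dense = atrous_conv1d(signal, kernel, dilation=1)    # outer taps 2 samples apart
dilated = atrous_conv1d(signal, kernel, dilation=3)  # outer taps 6 samples apart
print(effective_kernel_size(3, 1), dense)
print(effective_kernel_size(3, 3), dilated)
```

Larger rates widen the context seen by each output but skip the pixels in between, which is one reason the choice of rates may matter for small, clustered features such as the water bodies described here.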

Alternatively, the use of an encoder-decoder network like DeepLabV3+ has the potential to improve segmentation performance. The incorporation of additional skip connections from the entry and middle blocks of the DeepLabV3+ encoder has been shown to sharpen segmentation outputs (Prabha et al. 2020).

Experimenting with this technique could make it possible to detect and localise very small, narrow and complex water bodies. Some recent studies have swapped RGB input channels for alternatives (Jain, Schoen-Pelan, and Ross 2020). The performance of water segmentation with CNNs could be improved by replacing the RGB channels with band ratios or outputs of an existing spectral water index.
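One way to picture this suggestion is to compute a spectral water index per pixel and feed it to the network in place of a colour channel. The sketch below uses the Normalised Difference Water Index, NDWI = (green − NIR) / (green + NIR), purely as an example; the article does not say which index or band ratio would be used, and the function names, reflectance values and channel layout are illustrative assumptions.

```python
from typing import List

Band = List[List[float]]  # one reflectance value per pixel


def ndwi(green: Band, nir: Band, eps: float = 1e-9) -> Band:
    """Per-pixel NDWI = (green - NIR) / (green + NIR); eps avoids division by zero."""
    return [
        [(g - n) / (g + n + eps) for g, n in zip(green_row, nir_row)]
        for green_row, nir_row in zip(green, nir)
    ]


def stack_channels(*bands: Band) -> List[Band]:
    """Stack 2-D bands into a channel-first array: [channel][row][col]."""
    return list(bands)


# Hypothetical 2 x 2 scene: left column water, right column vegetation.
red = [[0.04, 0.05], [0.04, 0.06]]
green = [[0.06, 0.08], [0.06, 0.08]]
nir = [[0.02, 0.40], [0.02, 0.40]]

index = ndwi(green, nir)
# Example input layout (an assumption): the blue channel is swapped for NDWI.
network_input = stack_channels(red, green, index)
print(index)
```

Positive NDWI values generally indicate open water, because water reflects very little near-infrared light, whereas vegetation and built-up surfaces tend to give negative values.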

A further enhancement of the transfer learning aspect of this study could involve retraining DeepLabV3_Global to identify specific water typologies, rather than geographic locations. For example, DeepLabV3_Global could be re-trained on images collected in areas of karst limestone, instead of on samples limited to Florida. CNNs trained to capture the characteristics of specific typologies would enable broader usage than a CNN retrained for a specific geographic location.

This study has shown that CNNs are an effective tool for the segmentation of water bodies in medium resolution satellite imagery. This was done by training the DeepLabV3-ResNet101 network with manually labelled Copernicus Sentinel-2 imagery.

Three main conclusions can be made based upon this research:

- CNNs can be applied to medium resolution true-colour satellite imagery, to effectively map water bodies on a global scale.

- Water segmentation using CNNs on medium resolution true colour satellite imagery can outperform multispectral water segmentation indices.

- Transfer learning with small geographically localised datasets can improve the performance of CNN water segmentation, in specific geographic regions.

Further developments of the study could include adjusting the network to improve segmentation sharpness and feature localisation in EO imagery. Results could be improved by replacing the RGB input channels with alternatives, such as band ratios or spectral index (SI) outputs. Additionally, the model presented within this study could be ‘fine-tuned’ for specific water body typologies.

The results of this study could help broaden and streamline the use of EO imagery for water management, by improving the efficiency of EO processing chains and lowering the skill barrier.

From the results obtained, it was observed that CNNs are capable of outperforming spectral indices (SIs) for water segmentation tasks. The CNNs demonstrated an ability to identify contextual features such as boats, turbid water and sediment-rich intertidal water bodies. It was shown in all test cases that re-training the neural network on localised datasets improved prediction accuracy.

Dr Calogero Schillaci, of JRC-ISPRA, states, “Using RGB and Convolutional Neural Networks we can use a rich, six year archive of Copernicus Sentinel-2 images and compare them throughout the globe from year to year - we hope this will be helpful for water monitoring assessment.”

About the Copernicus Sentinels

The Copernicus Sentinels are a fleet of dedicated EU-owned satellites, designed to deliver the wealth of data and imagery that are central to the European Union's Copernicus environmental programme.

The European Commission leads and coordinates this programme, to improve the management of the environment, safeguarding lives every day. ESA is in charge of the space component, responsible for developing the family of Copernicus Sentinel satellites on behalf of the European Union and ensuring the flow of data for the Copernicus services, while the operations of the Copernicus Sentinels have been entrusted to ESA and EUMETSAT.

Did you know that?

Earth observation data from the Copernicus Sentinel satellites are fed into the Copernicus Services. First launched in 2012 with the Land Monitoring and Emergency Management services, these services provide free and open support, in six different thematic areas.

The Copernicus Land Monitoring Service (CLMS) provides geographical information on land cover and its changes, land use, vegetation state, water cycle and Earth's surface energy variables to a broad range of users in Europe and across the World, in the field of environmental terrestrial applications.

It supports applications in a variety of domains such as spatial and urban planning, forest management, water management, agriculture and food security, nature conservation and restoration, rural development, ecosystem accounting and mitigation/adaptation to climate change.

Disclaimer: The figures contained in this story are derived from the article published online in the International Journal of Remote Sensing on 25 April 2021, © 2021 Informa UK Limited, trading as Taylor & Francis Group.

Bibliography

Beck, B. 1986. “A Generalized Genetic Framework for the Development of Sinkholes and Karst in Florida, U.S.A.” Environmental Geology and Water Sciences 8 (1): 5–18. doi:10.1007/BF02525554.

Belward, A., and J. Skoien. 2015. “Who Launched What, When and Why; Trends in Global Land-cover Observation Capacity from Civilian Earth Observation Satellites.” ISPRS Journal of Photogrammetry and Remote Sensing 103 (103): 115–128. doi:10.1016/j.isprsjprs.2014.03.009.

Chen, L., G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. 2018. “DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs.” IEEE Transactions on Pattern Analysis and Machine Intelligence 40 (4): 834–848. doi:10.1109/TPAMI.2017.2699184.

Hoeser, T., and C. Kuenzer. 2020. “Object Detection and Image Segmentation with Deep Learning on Earth Observation Data: A Review-Part I: Evolution and Recent Trends.” Remote Sensing 12 (10): 1667.

Isikdogan, F., A. Bovik, and P. Passalacqua. 2017. “Surface Water Mapping by Deep Learning.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 10 (11): 11. doi:10.1109/JSTARS.2017.2735443.

Jain, P., B. Schoen-Pelan, and R. Ross. 2020. “Automatic Flood Detection in Sentinel-2 Images Using Deep Convolutional Neural Networks.” In SAC ’20: Proceedings of the 35th Annual ACM Symposium on Applied Computing, 617–623. Brno, Czech Republic.

Karimi, D., H. Dou, S. Warfield, and A. Gholipour. 2020. “Deep Learning with Noisy Labels: Exploring Techniques and Remedies in Medical Image Analysis.” In Medical Image Analysis.

Prabha, R., M. Tom, M. Rothermel, E. Baltsavias, L. Leal-Taixe, and K. Schindler. 2020. “Lake Ice Monitoring With Webcams And Crowd-Sourced Images.” ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci V-2-2020: 549–556. doi:10.5194/isprs-annals-V-2-2020-549-2020.

Talal, M., P. Alavikunhu, M. Husameldin, W. Mansoor, A.-M. Saeed, and A. A. Hussain. 2018. “Detection of Water-Bodies Using Semantic Segmentation.” In 2018 International Conference on Signal Processing and Information Security (ICSPIS).

Tsagkatakis, G., A. Aidini, K. Fotiadou, M. Giannopoulos, A. Pentari, and P. Tsakalides. 2019. “Survey of Deep-Learning Approaches for Remote Sensing Observation Enhancement.” Sensors 19 (18): 3929. doi:10.3390/s19183929.

Wang, Y., Z. Li, C. Zeng, G.-S. Xia, and H. Shen. 2020. “An Urban Water Extraction Method Combining Deep Learning and Google Earth Engine.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 13: 769–782. doi:10.1109/JSTARS.2020.2971783.

Wieland, M., and S. Martinis. 2020. “Large-scale Surface Water Change Observed by Sentinel-2 during the 2018 Drought in Germany.” International Journal of Remote Sensing 41 (12): 4742–4756. doi:10.1080/01431161.2020.1723817.